The Coming AI Revolution

Last Updated: 2022-12-17

With the release of ChatGPT, on the back of Dall-E and Stable Diffusion, the internet has been buzzing. ChatGPT is not new - it is an evolution of GPT-3, which was released in 2020 and caused much interest in the tech industry but didn't really get a mention in the mainstream press. ChatGPT has changed all that, and is demonstrating that we are on the verge of a revolution for which we must prepare, both economically and in general understanding.

AI Old and New

AI began mainstream life most recognisably as Siri and Google Assistant, although it was already powering things like recommendation engines and language translation. Recommendation engines were a mixed bag, however: some were large data models driven by graph algorithms designed to pick out trends, and so were not really AI, although they looked like AI on the surface.

Siri and Google Assistant showed that AI could be used to understand the intent of a question and then construct a suitable response. These assistants had a good 'wow' factor but anecdotal evidence around the web suggested that Siri was only really good at unlocking your iPad, setting a timer and telling a few bad jokes. A vitally important component of these was the language comprehension, which also powers many language translators around the web. These have provided a benefit to millions, and I use a Vietnamese-English translator quite regularly.

Since then, 'New' AI has been bubbling away in labs and has targeted the creative world. OpenAI is probably at the forefront: Dall-E (a play on Dalí and WALL-E) was released in 2021 and the world probably didn't know what to make of it - it was mostly used to generate quirky mash-ups and fantastical images. ChatGPT is the generalisation of AI encapsulated in an 'ultimate chatbot'. I have seen examples of using ChatGPT to explain the origins of the solar system, to write poems, to write code and even to write an email to contest a parking ticket.

The other area in which AI has been popularised is self-driving cars. I remember betting with my friends that at least one of my children (currently 7 and 11) probably won't need a driving licence to get around, and will instead be driven by some kind of automated service. I think for my 7-year-old this is still a pretty good bet.

The New Disruptor

Freelancing websites like Fiverr.com have thrived on small tasks that require targeted skill. These skills are generally not difficult to learn and involve some creativity. For example, I know how to use basic drawing packages, and I can create simple pictures myself, but I don't really want to put hours of effort into designing a logo. Hundreds of people out there, however, have learned this skill and can apply it quickly and repetitively in many contexts. Other examples include making collages in Photoshop, generating icon sets for websites, or proofreading and writing copy.

These narrow-skilled services are square in the sights of upcoming AI models. I have recently seen an AI model which not only generates 3D rendered graphics but also generates the underlying models and textures, which means that you can then continue to fine-tune them yourself and integrate them more easily into the project you required them for. On YouTube there is a mini-industry providing thumbnail pictures for videos, as well as background soundtracks and title design. Content creators often want to focus on the content itself, and so outsource these tasks to others. But the new creative AI is on the verge of changing all that.

What other industries are in AI's sights? You can use AI to generate photo-realistic random faces, potentially doing away with the need for a model or stock photo site. There is AI-generated music, including a whole album from artist Brian Eno. All of these industries and their creators will soon face a very important choice - position your service somehow between the customer and the AI, or face unwinnable competition from never-sleeping systems that can replicate the service in a matter of minutes, day or night.

The Importance of Understanding

This new AI is amazing, no doubt. But is it right to say that these systems are truly intelligent? Dall-E and ChatGPT are trained on vast collections of data, and at their essence they are good at two things: Understanding your commands (which in itself is amazing), and knowing how to respond with correct and relevant information in a human-like way. But underneath, it still responds based on how it is trained. There are three things which I think need to be understood about AI: that AI is a product of training data; that poor data control introduces biases; and the ethical consequences of these.

It's About the Data

The bottom line is: A model is only as good as its data. One major reason that these AI models are so good is they are trained on vast amounts of data, and I mean vast. The reason ChatGPT 'knows' so much is that it learnt from so much. But does this mean that it knows everything?

Simply put, no. Just like a human, it must first be taught, and if it isn't taught it doesn't know. When I got a chance to play with ChatGPT I threw it a few questions and I realised that it was probably able to regurgitate anything I asked it that could be found, say, on Wikipedia. It did pretty well, but then I asked it what the relationship between e, i and pi was. Should be a breeze, I thought? Nope. It was happy to tell me about each component, but it was stumped as to how they are related. However, when I specifically asked it about Euler's identity it answered without hesitation.
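For reference, the relationship in question is Euler's identity, e^(i*pi) + 1 = 0, which ties the three constants together in a single line. The short Python sketch below is added purely as an illustration (it is not output from ChatGPT) and checks the identity numerically using the standard library; the variable name is arbitrary.

```python
import cmath

# Euler's identity: e^(i*pi) + 1 = 0
euler_sum = cmath.exp(1j * cmath.pi) + 1

# Floating-point arithmetic leaves a tiny residue rather than an exact zero,
# so compare the magnitude against a small tolerance instead of testing == 0.
print(abs(euler_sum) < 1e-12)
```

The tolerance of 1e-12 is an arbitrary choice for this sketch; any small threshold comfortably above floating-point rounding error would do.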

The other thing we need to understand about this AI is whether we can be sure that the information is correct or not. In its early years, and sometimes still today, the information on Wikipedia was erroneous or at least had doubtful or debated accuracy. As such, it is actively policed, checked and corrected. AI training data is no different, but is possibly more impactful because attaching the word "intelligence" to it may influence our level of trust. This may not be a problem with things like AI-generated images or music, but it is important for factual queries.

Some journalists have recently asked ChatGPT to make predictions, and the articles have been framed around the software's ability to do such a thing. But the main ability on display here is the way that the answers are returned in such an incredibly human-like manner. Combined with the nature of the question, it is easy to fall into the trap of believing that the software is indeed making a prediction, much like an expert could. But it is not an expert, even if it seems to sound like one.

But data accuracy and limitations are only one part of a larger issue.

Bias

Bias in intelligent systems is not new, and once again it comes down to how the system is designed or trained. In 2019 an incident in a bathroom briefly went viral on social media: Could a soap dispenser be racist? The simple answer is "no, it was bad engineering", but the more nuanced answer is "why was the technology engineered without sufficient thought about diversity?". Perhaps the biggest oversight in diversity is that between genders, which is further explored in the book Invisible Women.

There are also more subtle biases. In a conversation with a Danish friend, he revealed that Danish has the word 'kæreste', a gender-neutral word for 'romantic partner'. However, when it was paired with different adjectives or actions, machine translation rendered it as either 'boyfriend' or 'girlfriend', apparently depending on the context.
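To make the mechanism concrete, here is a deliberately simplified Python sketch of how a system that learns only from word associations could end up gendering a neutral word. Real translators are far more sophisticated than this, and everything here is hypothetical: the function name, the context words and the counts are invented for illustration and are not taken from any real translation system or dataset.

```python
from collections import Counter

# Hypothetical training pairs (context word, English rendering of 'kæreste').
# These pairs are invented for this illustration only.
TOY_CORPUS = [
    ("engineer", "boyfriend"), ("engineer", "boyfriend"), ("engineer", "girlfriend"),
    ("nurse", "girlfriend"), ("nurse", "girlfriend"), ("nurse", "boyfriend"),
]


def translate_kaereste(context_word: str) -> str:
    """Pick the English word seen most often with context_word in the toy corpus."""
    counts = Counter(english for word, english in TOY_CORPUS if word == context_word)
    if not counts:
        # No evidence for this context, so fall back to a neutral word.
        return "partner"
    return counts.most_common(1)[0][0]


for word in ("engineer", "nurse", "gardener"):
    print(word, "->", translate_kaereste(word))
```

Nothing in the code mentions gender, yet the output is gendered whenever the training pairs are skewed. The bias lives entirely in the data, which is why being able to inspect that data matters.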

This is where closed and open systems will come into play. ChatGPT is open, but Google's AI is closed. In closed systems, will it be possible to validate the training data? Will some AI be created with a political, racial or other behavioural leaning? And will we be able to tell? We have already seen recommendation algorithms create 'echo chambers' where you are fed news stories and opinions similar to those you have seen before. Meta is also working on AI - if it is being trained on data from Facebook, who knows what kind of biases we will get.

Ethics

The creators of GPT-3 were aware that the AI could be asked to do unethical things, so when they released ChatGPT they implemented some boundaries on what you can ask it, as described in their content policy (it will reject attempts to request hateful, violent, shocking or illegal material). However, human ingenuity is still ahead of this curve, and ChatGPT can still be misled and tricked into being unethical. One amusing way was for a user to ask it to write a play which contained a haiku in praise of methamphetamine. The OpenAI CEO has already clarified that ChatGPT is still experimental and should not be used for serious purposes. But how many are listening?

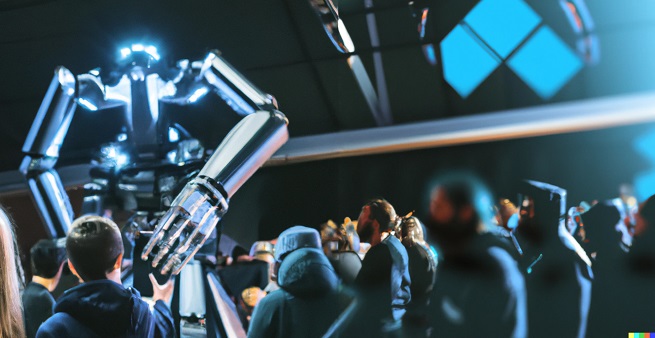

What about Dall-E? Can it be asked, for example, to generate pictures which may be used for potentially violent propaganda? I attempted to generate some art which might push the boundaries of this. You might say that what I got was editorial-quality (above), but with enough resources, time and a bit of Photoshop it would be possible to at least speed up the production of this type of image. The machine cannot know what the images are being created for, so a certain amount of ethical control must lie outside of the algorithm.

There are also other ethics at play, predominantly that of copyright. While you can say that the computer has generated text or produced an image 'on its own', the origins of these creations will sometimes bleed through into the content. When I was playing with Dall-E, some of its output (notably the picture with the American flag) contained possibly recognisable human figures. Stock photo sites such as iStock and Getty have strict policies of obtaining agreement from a model for their 'recognisable image' to be used for commercial purposes. When you dig into 'recognisable', this does not only mean that their face is visible. So what happens if a model discovers their recognisable form in a generated image?

Text, and especially code, present a much more black-and-white view of this. It is not unreasonable to say that generated code or prose will contain recognisable snippets or passages which may be subject to copyright. There is currently a lawsuit that has been brought against GitHub Copilot which argues this case.

Next Generation

So where do we go from here? What are the positives? Assuming the issues above are solvable, I see the biggest benefits coming from pairing AI with other technology. Most media coverage seems to position the new AI as a tool for assisting people and increasing productivity, and I agree with this. In fact, nearly all the images that you see on this page were generated using Dall-E*. But it is when AI is combined with another technology that we will see the most benefit.

The most obvious example is Tesla and self-driving cars (vehicles + AI). Self-driving trucks are already under development, and could present a revolution for freight and haulage. One exciting prospect I am watching closely is at NVIDIA: while DeepFake technology is treading the line between entertainment and propaganda, NVIDIA are adapting the technology and combining it with video conferencing to allow people to conduct remote meetings with 10x less bandwidth. The current results are amazing.

What other combinations can be thought of? What combinations will unlock the most benefit for humans? And what combinations should we avoid? (I'm looking at you, Neuralink.)

Cautiously to the Future

We are indeed on the verge of a revolution in the field of artificial intelligence. As such, it is important that people approach AI with a sense of education and understanding about what it means and the potential risks that it presents. This includes being aware of issues such as hidden bias in AI algorithms and the ethical implications of its applications. By taking the time to educate ourselves about AI, we can better navigate the rapidly changing landscape of technology and ensure that we are using it in a way that is responsible and beneficial for society as a whole.

And yes, that last paragraph was written by ChatGPT.

[* The only one which isn't is the assortment of 3D models, which was taken from NVIDIA]